Epilogue of Tutorial 1

1. Party epitome

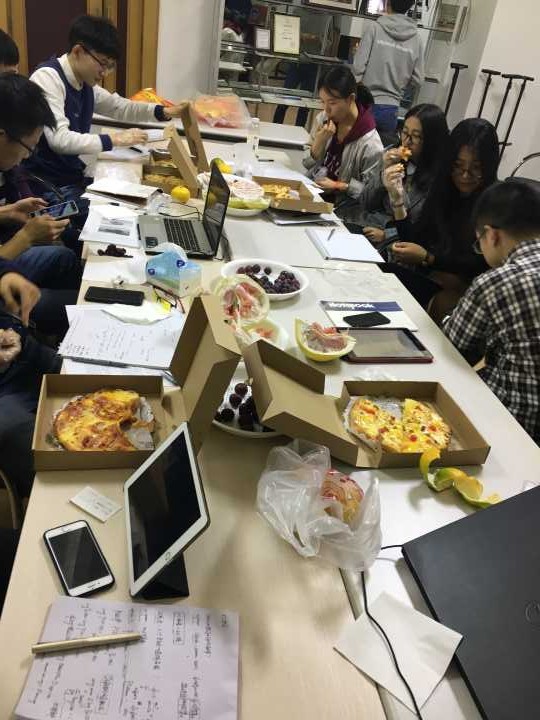

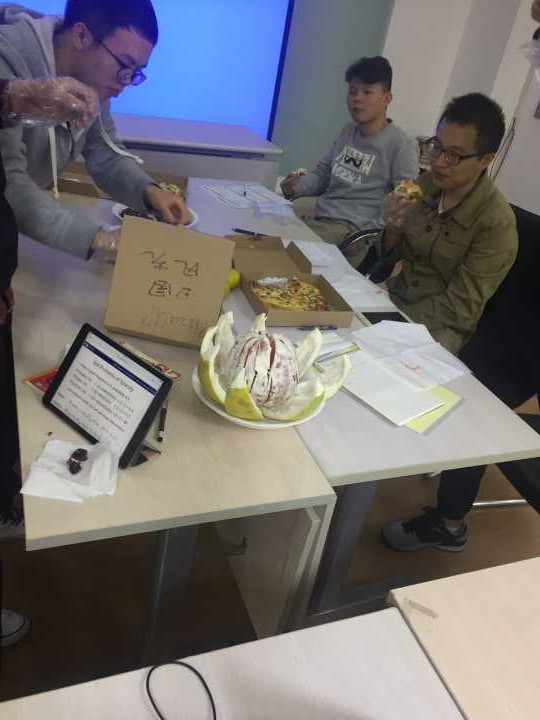

Our 1st evening tea party is taken on 7:00 PM September 29. To me, it is a very lovely night, since everyone enjoys the atmosphere of chatting with nearby, white-board discussion and pizza-right-fruit-left. What a pity that we haven’t taken any (wtf!? My fault…) photos as a “serious” proof of the statement in the previous sentence (are you kidding me?!). Ah hah! Let us enjoys the beautiful cartoon images of three of our speakers.

During the first part of our party, Shucun (恕存) talked about his understanding of the god-damn Lagrange multiplier method of prove the MLE of count-based n-gram language models. He use a very “nice” example: “A dog a dog” to demonstrate the following formula. He is really brilliant!

\[\log P(s) = \sum_{\forall w_i, w_j \in \mathcal{V}} Count(w_i, w_j \vert w_i, w_j \in s) \cdot \log P(w_j \vert w_i)\]

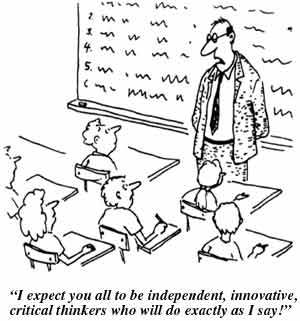

The above picture is Shucun as a instructor talking to us all. He appears to be a very mature gentleman hah and tries to convince us with big words!

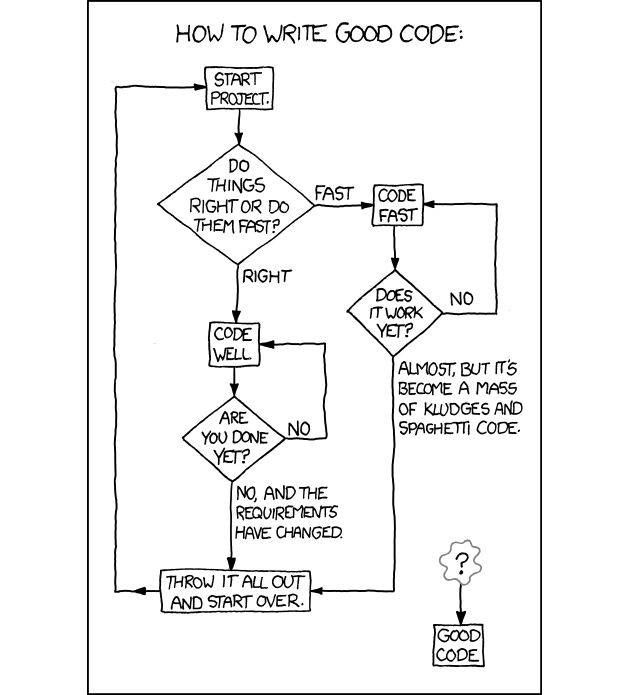

The second part of our party belongs to Hangbo (航波), who is good at programming and has strong computational thought. Look at him in the following image. You may say, “Wait a minute? Where on earth is him?” Don’t worry, he has become the flow plot which can represents his mission on getting program neat, clear and high-speed! [\Facepalm]

The last part of our party is the most excited time. Our Heng (阿恒) organized some issues and questions for us to discuss over the paper “Two decades […]”, which is a very well-written survey in 2000 on different aspects and promising directions of statistical language modeling. Heng talked on many topics, like the his understanding of learning linguistic structures and facilitate latent variables under the surface to help improve the predictive power of language modeling. He also talked about domain adaptation of LM and gave us two commonly used tricks to do transfer learning, bravo!

Find him in the above picture. Wow! It is amazing that he has contributed that early in the field and become one of the 3-big heads of SLM! Congratulations to him.

2. After-party chat about pros & cons

[TO-DO] This part have not yet been organized to be posted. Waiting for interviewing everyone.

Appendix

A.1. Topics

The following table summarize some topics our members are trying to work or working on. Find your mates and discuss with him about your topics.

| Name | Topics you have to work on | Topics you like |

|---|---|---|

| 马晶义 | University Admission Chatbot | QA, Dialogue |

| 吴焕钦 | NMT, QE for MT | NMT |

| 田恕存 | Text classification | Domain Adaptation |

| 龚恒 | Text Generation | Seq2seq Model |

| 侯宇泰 | TBD | TBD |

| 陈双 | Text Generation | Seq2seq Model |

| 胡东瑶 | NLP for law big data (Entity recognition, text classification, etc) | Embeddings, seq2seq |

| 赵笑天 | History Knowledge Based Chatbot | QA |

| 白雪峰 | Cross-lingual word representation, GAN | NMT |

| 李冠林 | NMT, Discourse Relation Classification | Interactive, Representation Learning, Causal Reasoning and Deep Generative models |

| 王贺伟 | Text Classification | QA |

| 张冠华 | None | Machine Learning in General |

| 杨奭喆 | None | NMT |

| 鲍航波 | None | Text Generation |

| 赵晓妮 | Sentiment Analysis | Sentiment Analysis |

| 王瑛瑶 | None | NER and relationship extraction of biomedical text |

| 邓俊锋 | None | Statistical Learning |

| 唐成达 | None | RNN |

| 张丽媛 | None | NLP in General |

A.2. Real images